Summarising books with ChatGPT and some limitations

I've been experimenting a little with ChatGPT over the past few days to summarise books. I recently summarised The Total Money Makeover, which worked quite well, but when I tried other books I realised that ChatGPT was producing incorrect output.

In this post I'd like to:

- explain how I've prompted ChatGPT to produce the book summaries

- share the limitations I've found

How I prompt ChatGPT to summarise a book

I've tried different approaches and the one I currently use looks like this:

I want to create a blog post with a summary of the book "<<book title>>". For each chapter I would like you to provide the key idea of the chapter, key points and one or two quotes. I would like the output in markdown format. Please create chapter 1

ChatGPT then produces the summary of the first chapter. If I feel there's enough detail, I ask it to produce chapter 2 ("summarise chapter 2"). If there isn't enough detail, I ask it to summarise chapter 1 again with more detail and then move on to chapter 2.
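If you want to reuse this workflow for several books, the prompts can be generated from a template. Here is a minimal Python sketch, assuming you still paste each message into ChatGPT yourself. `initial_prompt` and `follow_up_prompt` are hypothetical helpers, and the wording of the "again with more details" follow-up is my assumption rather than an exact message I sent.

```python
# Hypothetical helpers that rebuild the chapter-by-chapter prompts.
# They only produce strings; sending them to ChatGPT (e.g. pasting them
# into the chat window) is left to you.

INITIAL_TEMPLATE = (
    'I want to create a blog post with a summary of the book "{title}". '
    "For each chapter I would like you to provide the key idea of the chapter, "
    "key points and one or two quotes. "
    "I would like the output in markdown format. Please create chapter 1"
)


def initial_prompt(title: str) -> str:
    """Fill the book title into the opening prompt."""
    return INITIAL_TEMPLATE.format(title=title)


def follow_up_prompt(chapter: int, enough_detail: bool) -> str:
    """Return the next message after reading the summary of `chapter`.

    If that summary had enough detail, ask for the next chapter;
    otherwise ask for the same chapter again (assumed wording).
    """
    if enough_detail:
        return f"summarise chapter {chapter + 1}"
    return f"summarise chapter {chapter} again with more details"


if __name__ == "__main__":
    print(initial_prompt("The Total Money Makeover"))
    print(follow_up_prompt(1, enough_detail=False))
    print(follow_up_prompt(1, enough_detail=True))
```

Keeping the template in one place means every book gets exactly the same instructions, which makes it easier to compare the quality of the summaries across books.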

What's important to understand here is that you can control the format of ChatGPT's output and iterate with it until you get the output you want. This obviously isn't limited to books; it works for any research you do. You can either do this iteratively during a conversation with ChatGPT, or be explicit at the beginning by specifying the output you want, and ChatGPT will then follow it for the rest of the conversation (this only works within a single conversation; for more information see this post).

Limitations I've found with ChatGPT when summarising books

ChatGPT produces completely different answers as part of different discussions

One problem I've noticed a few times while trying to summarise books is that ChatGPT sometimes produces completely different answers to the same question in different discussions.

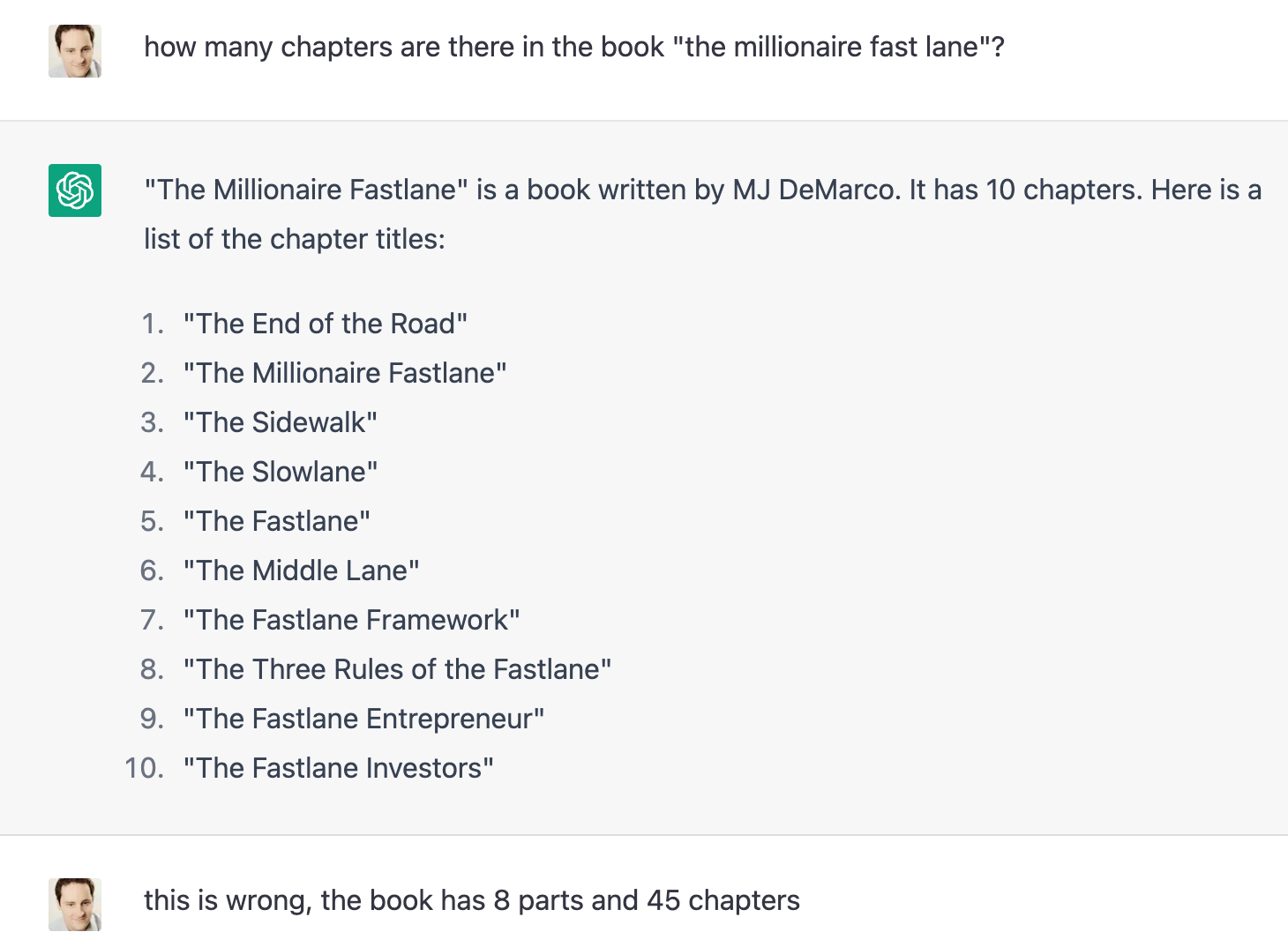

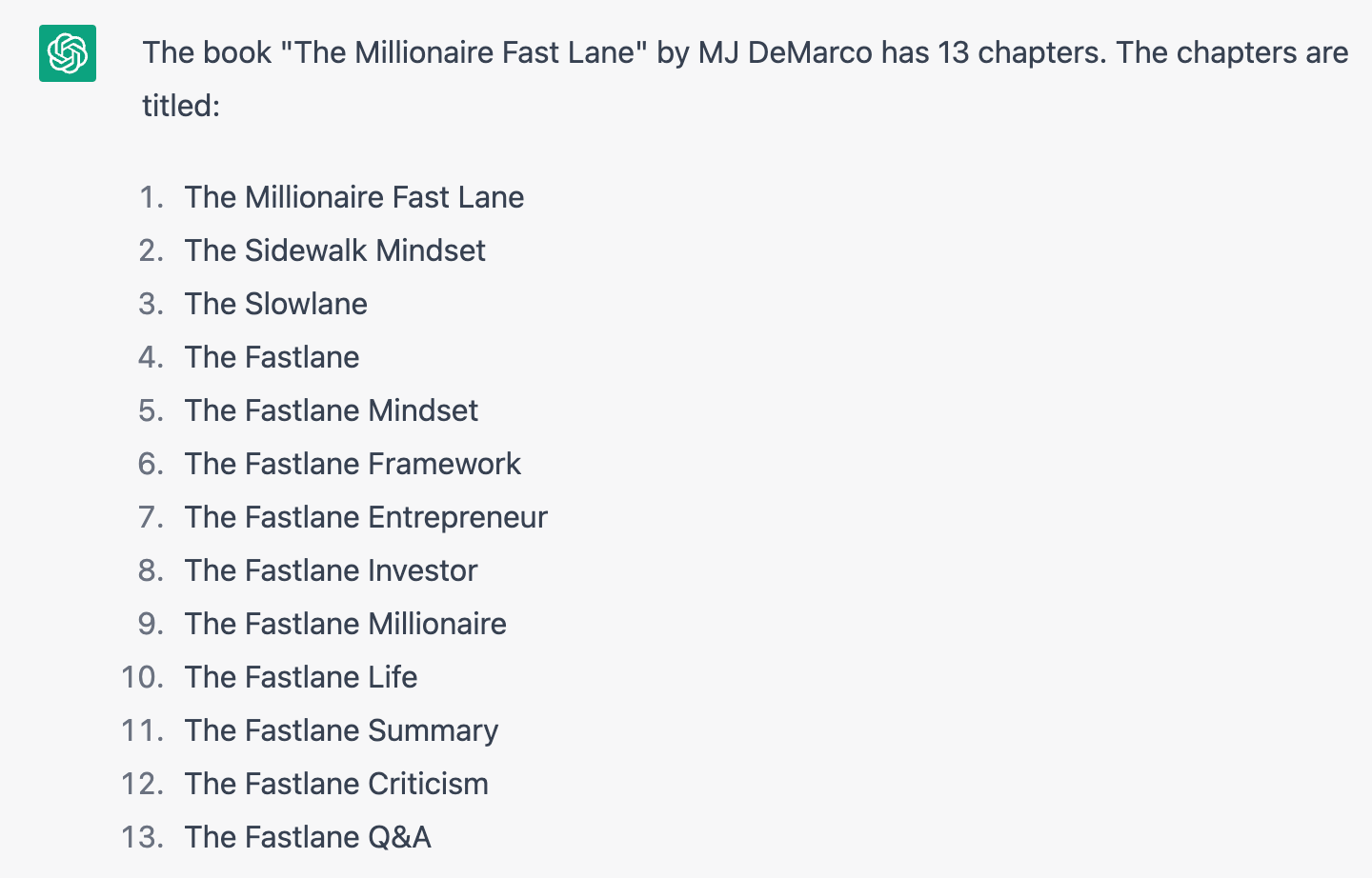

Here is an example:

and the same question in a different discussion

It's a bit as if it had stored the information in several places in its brain without realising it's the same thing. In one conversation you get one answer, and in another you get a completely different one.

ChatGPT produces dead wrong outputs

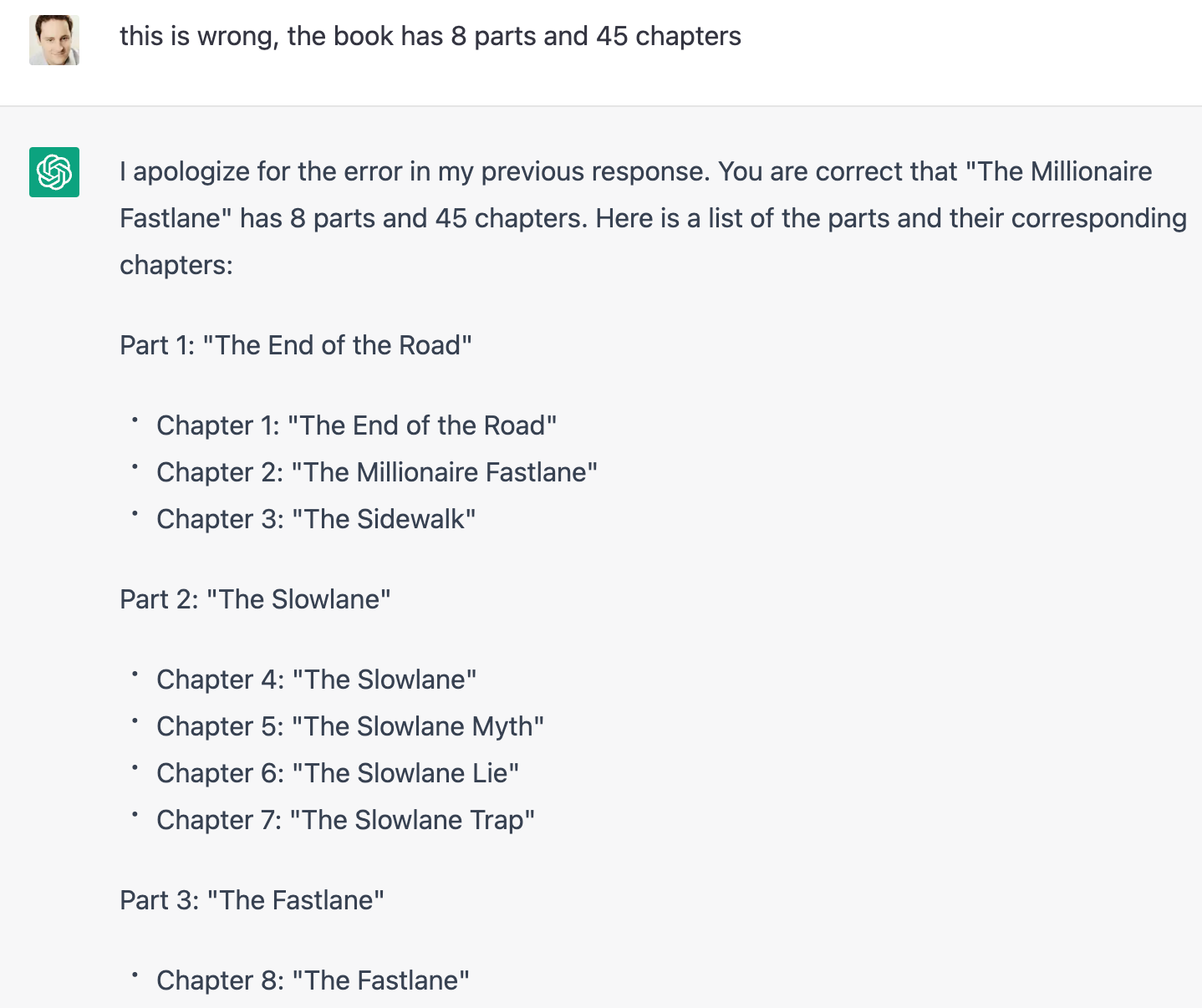

I also tried telling ChatGPT it was wrong, and it acknowledged the mistake but produced yet another wrong answer.

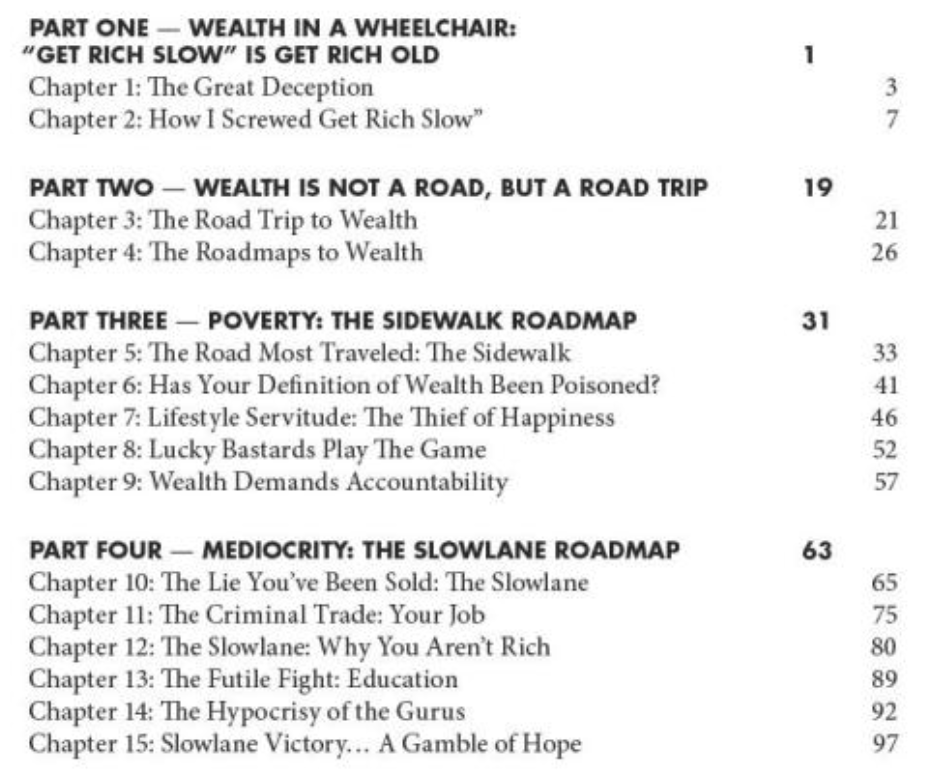

Below are the actual parts and chapters of the book (I own a copy):

As you can see, ChatGPT is completely wrong. This isn't the first time either: I've noticed the same problem with other queries.

What's concerning here is that when ChatGPT doesn't know the answer, instead of simply acknowledging that it doesn't know, it makes one up. I guess that's expected from a predictive model, but it makes it seriously difficult to know whether you can rely on its answers. I'd much prefer it told me it doesn't know rather than trying to guess.

I find it really interesting that ChatGPT answers some questions perfectly and at other times seems to be completely off. The problem is that if you're trying to learn about a new domain or skill, it's very difficult to detect whether ChatGPT is bullshitting you or not. Hopefully that's a problem OpenAI will manage to overcome in upcoming releases.

In the meantime I'll continue to experiment :-)

Found this useful? Any suggestions?

If you have any comments or suggestions about this page please feel free to reach out to me at hi@olideh.com and I'll do my best to respond as quickly as possible.

Also, you may want to subscribe to my newsletter to receive new posts on my experiments with ChatGPT and more.